MARS is the Manycore Architecture and Systems Research group at Washington State University (WSU). We pursue research projects that enable a virtuous cycle in which ML techniques advance hardware designs spanning edge devices to the cloud, and those designs in turn empower further advances in ML (a.k.a. ML for ML). We apply this approach to augment system design in sustainable computing, hardware-software co-design, and applied ML. Our research spans multiple layers of machine learning and manycore SoC design and test. Our group is a collaboration among researchers at WSU, eLab at UW-Madison, ASU, and industry partners.

Research

Fault-Tolerant PIM accelerator

A novel fault-tolerant framework called FARe that enables on-device GNN training using ReRAM-based architectures.

Dynamic Resource Management

Machine learning-based dynamic resource management policies for mobile systems, large manycore systems, and PIM-based architectures.

Chiplet-based Architecture Design

A novel server-scale 2.5-D manycore architecture called SWAP for deep learning (DL) applications.

DNN Training and Graph Learning at the Edge

In-memory acceleration of DNN training workloads.

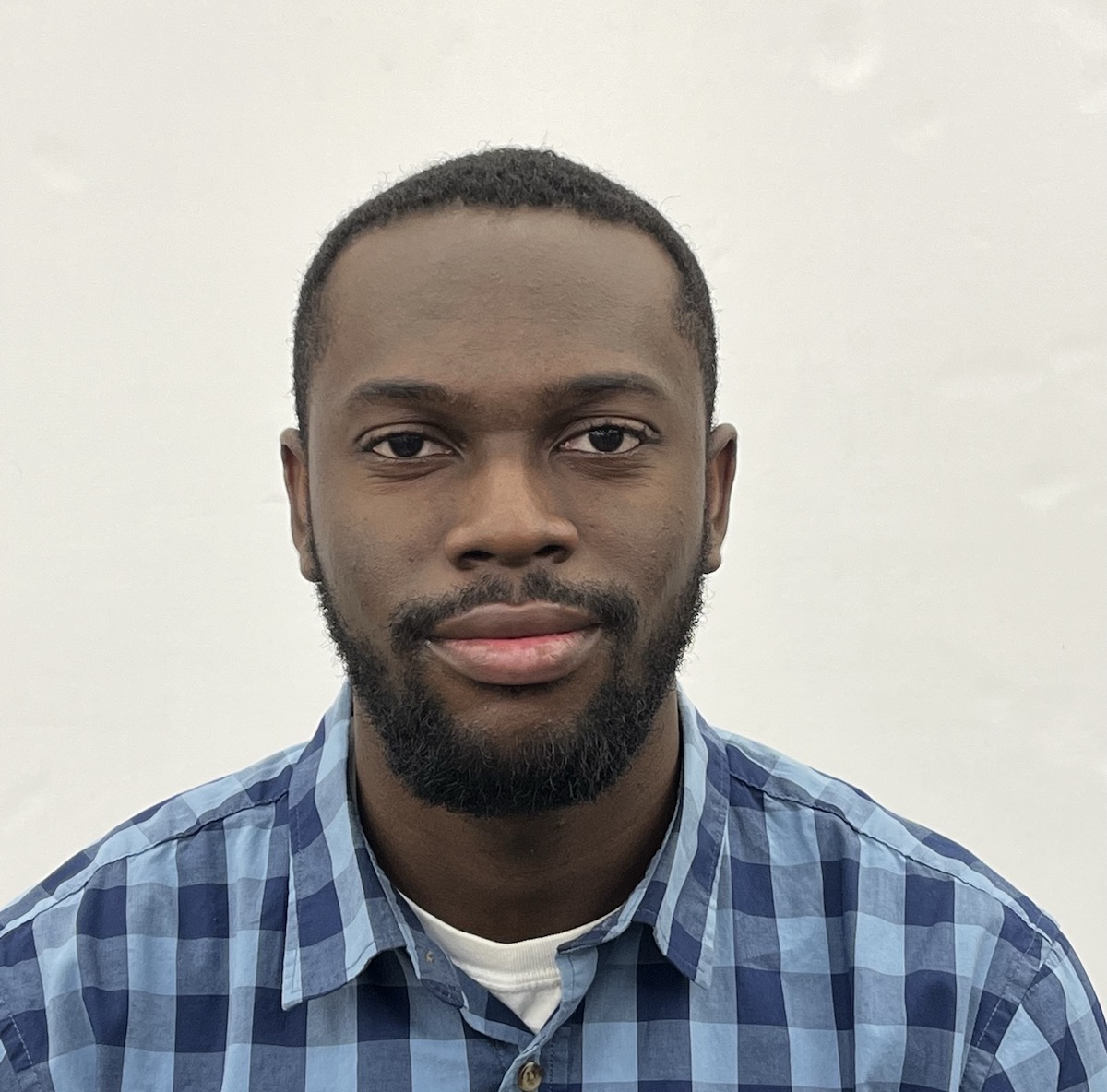

Hey, I am Partha, Director at the MARS Lab

Partha Pratim Pande is a professor and holder of the Boeing Centennial Chair in computer engineering at the School of Electrical Engineering and Computer Science, Washington State University, Pullman, USA. He is currently the director of the school. His current research interests are novel interconnect architectures for manycore chips, on-chip wireless communication networks, heterogeneous architectures, and ML for EDA. Dr. Pande currently serves as the Editor-in-Chief (EIC) of IEEE Design and Test (D&T). He is on the editorial boards of IEEE Transactions on VLSI (TVLSI), ACM Journal of Emerging Technologies in Computing Systems (JETC), and IEEE Embedded Systems Letters. He was the technical program committee chair of the IEEE/ACM Network-on-Chip Symposium 2015 and CASES (2019-2020). He also serves on the program committees of many reputed international conferences. He won the NSF CAREER award in 2009 and the Anjan Bose outstanding researcher award from the college of engineering, Washington State University, in 2013. He is a fellow of IEEE.

Mission

The rapid advance in ML models and ML-specific hardware makes it increasingly challenging to build efficient and scalable learning systems that can take full advantage of the performance capability of modern hardware and runtime environments. Today's ML systems rely heavily on human effort to optimize the training and deployment of ML models on specific target platforms. Unlike conventional application domains, learning systems need to address a continuously growing complexity and diversity in machine learning models, hardware backends, and runtime environments.

The Computer Architecture and Machine Learning Research Lab at Washington State University (WSU) is a distinguished center of excellence committed to pushing the boundaries of knowledge in the fields of chiplet-based systems, manycore architecture design, resource management, Processing-in-Memory (PIM), and fault-tolerant systems. With a relentless pursuit of groundbreaking research, a culture of innovation, and a dedication to cultivating the next generation of computer architects and machine learning experts, our lab seeks to shape the forefront of these critical areas of study.

Our lab is at the forefront of pioneering research on chiplet-based systems, where we explore and develop novel methodologies to revolutionize computer system design. By leveraging the advantages of chiplets (discrete modular components that can be independently designed and integrated into a single system), we aim to overcome the limitations imposed by monolithic designs. Through interdisciplinary collaborations, we strive to unlock new avenues of system-level integration, fostering enhanced performance, scalability, and energy efficiency.

Manycore architecture design is the core focus of our lab's research endeavors. As computing systems continue to embrace parallelism, we investigate and engineer scalable architectures that can accommodate a multitude of processing cores while ensuring optimal resource utilization. Our research encompasses the intricate interplay of interconnectivity, memory hierarchy, and workload distribution to facilitate highly parallel and high-performance computing.